Liwei Wang

I am an Assistant Professor in the Department of Computer Science and Engineering at The Chinese University of Hong Kong (CUHK). Before coming to Hong Kong, I worked for more than two years as a Senior Researcher at Tencent AI Lab in Bellevue, US.

Email / Google Scholar / Publications / Lab website (coming soon)

News

Recent Research Highlights

My students and interns are marked with '*'. See the full publication list for all papers.

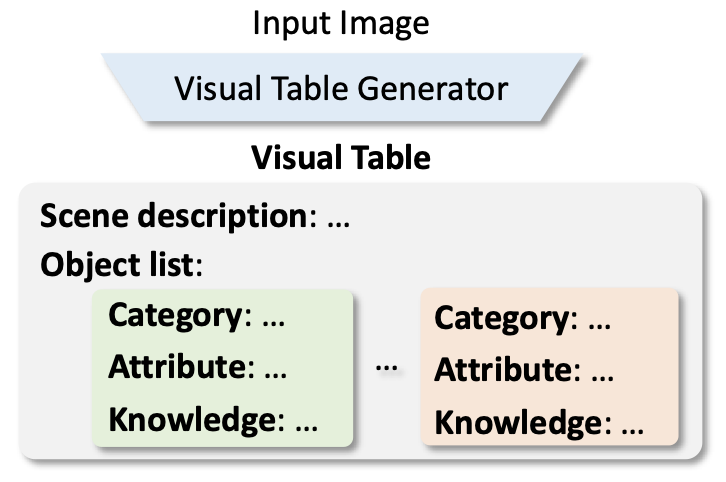

Beyond Embeddings: The Promise of Visual Table in Multi-Modal Models. arXiv, 2024.

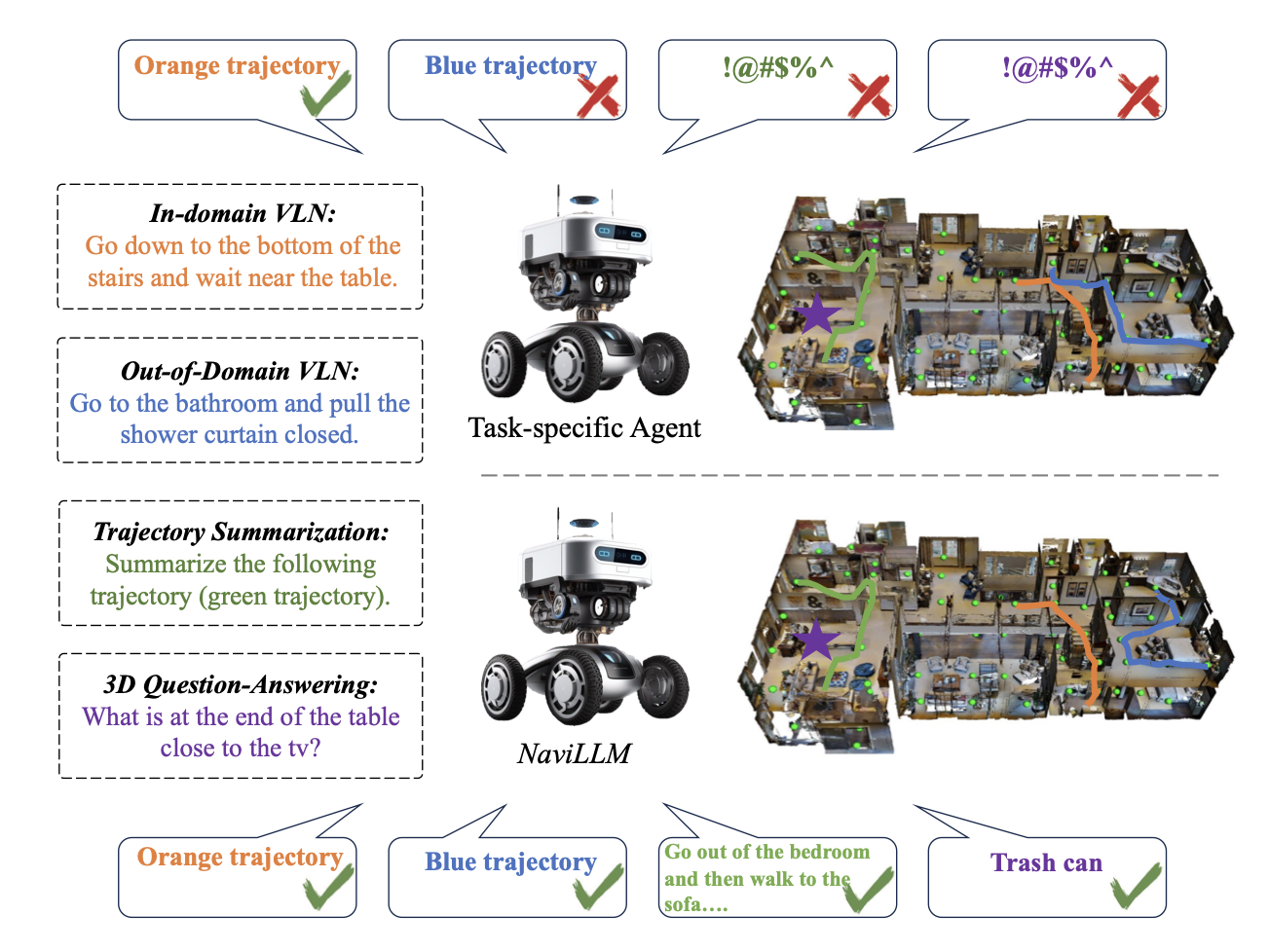

Towards Learning a Generalist Model for Embodied Navigation. CVPR, 2024. Code

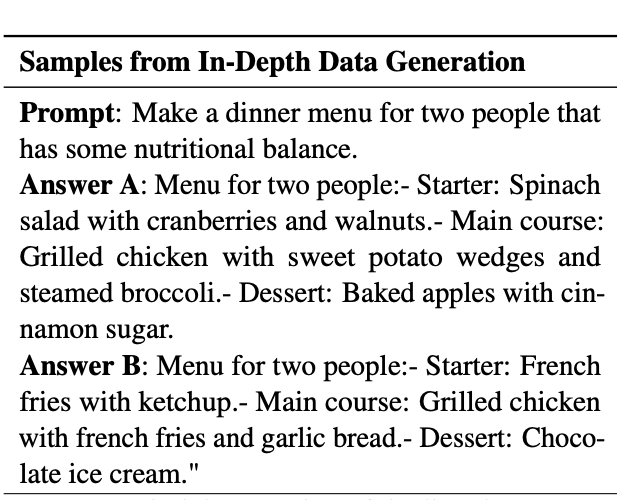

Learning Preference Model for LLMs via Automatic Preference Data Generation. EMNLP, 2023, Long Paper.

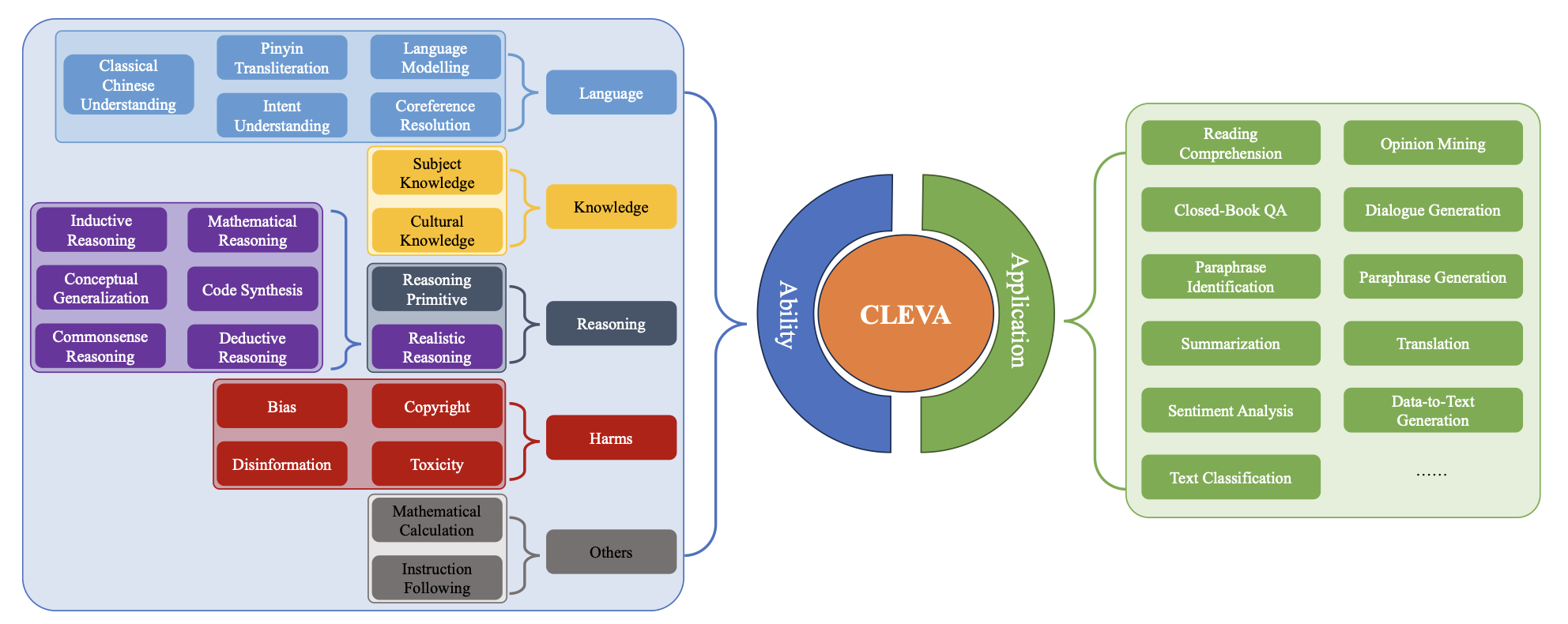

CLEVA: Chinese Language Models EVAluation Platform. EMNLP, 2023, System Demonstration. Project

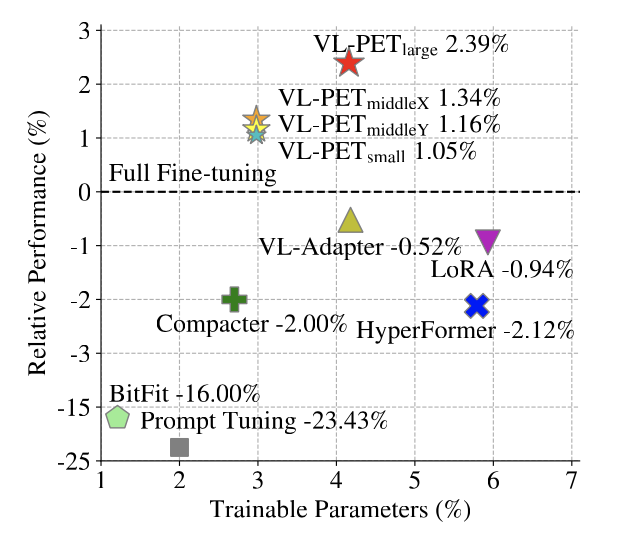

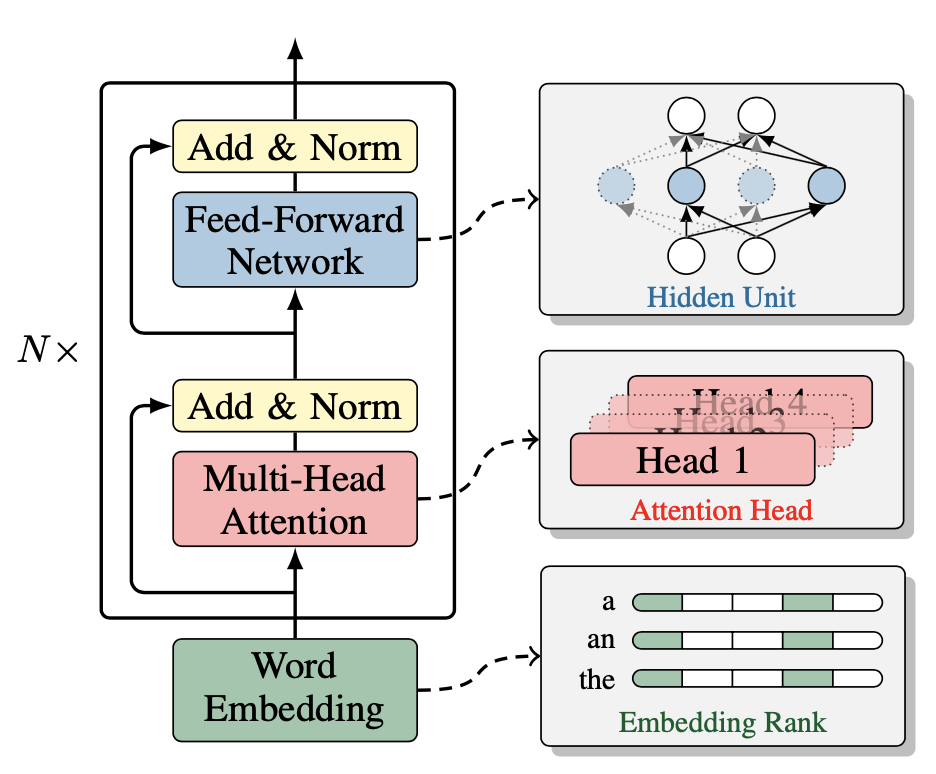

VL-PET: Vision-and-Language Parameter-Efficient Tuning via Granularity Control. ICCV, 2023. Code

Multi-View Transformer for 3D Visual Grounding. CVPR, 2022. Code

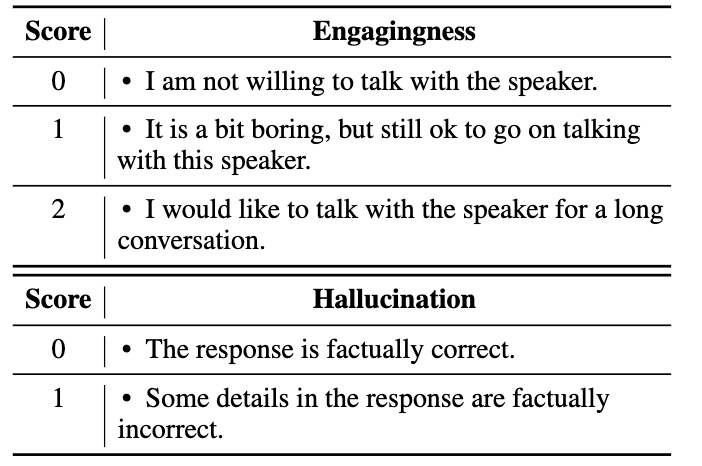

Eliciting Knowledge from Large Pre-Trained Models for Unsupervised Knowledge-Grounded Conversation. EMNLP, 2022, Long Paper. Code

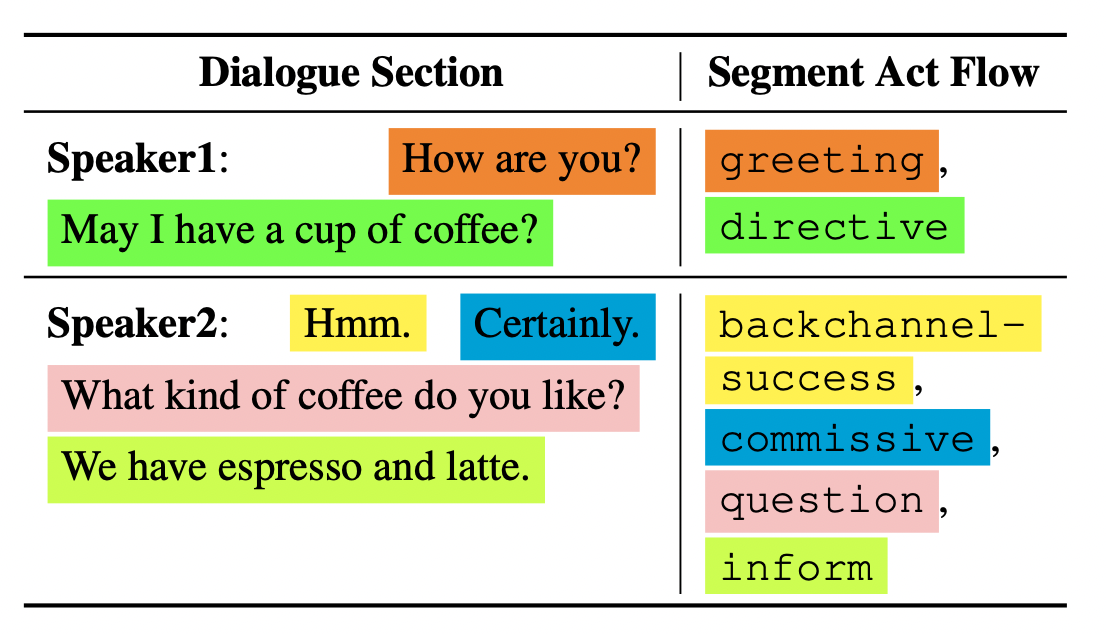

FlowEval: A Consensus-Based Dialogue Evaluation Framework Using Segment Act Flows. EMNLP, 2022, Long Paper. Code and Dataset

Probing Structured Pruning on Multilingual Pre-trained Models: Settings, Algorithms, and Efficiency. ACL, 2022, Long Paper.

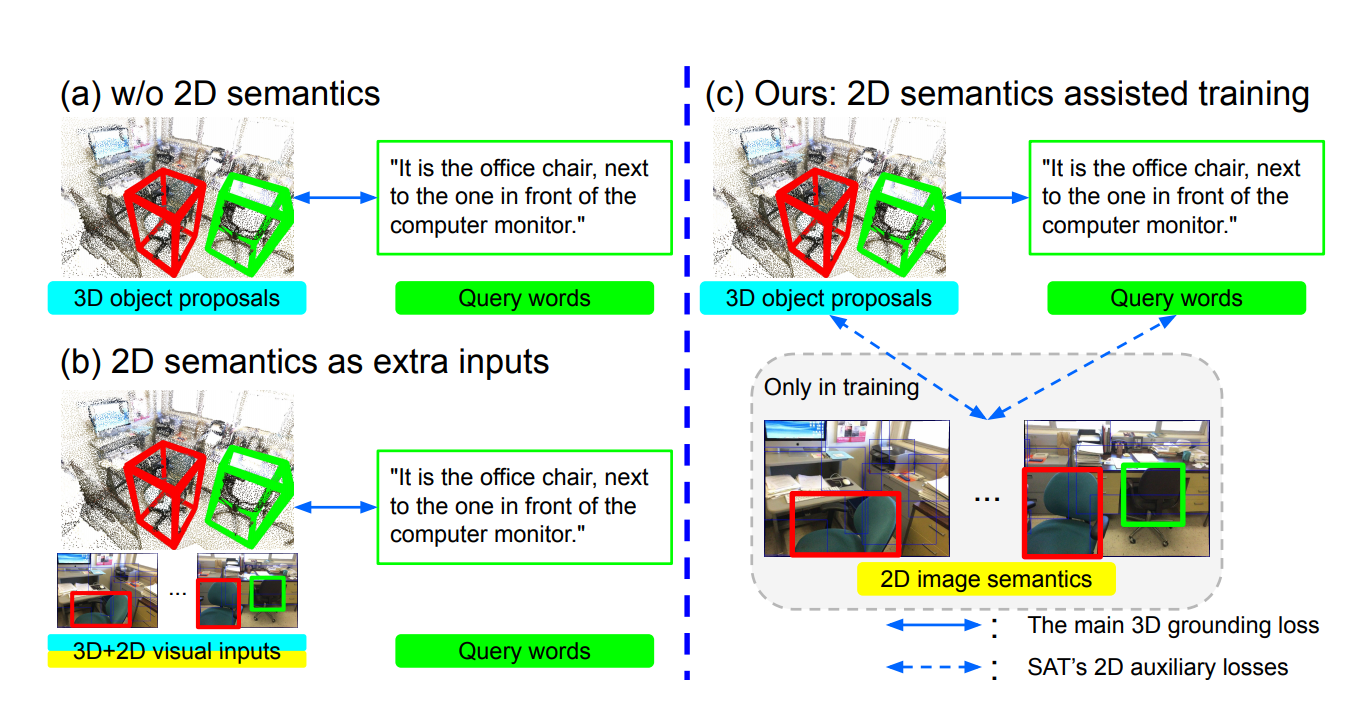

SAT: 2D Semantics Assisted Training for 3D Visual Grounding. ICCV, 2021, Oral Presentation. Code

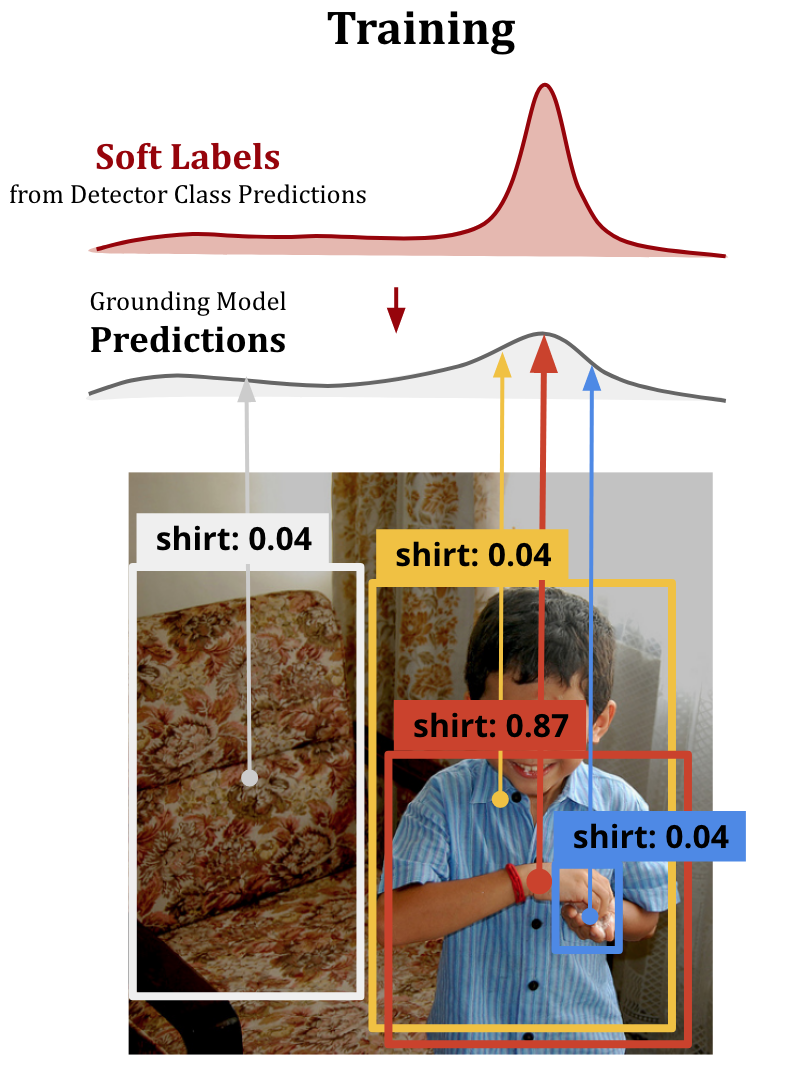

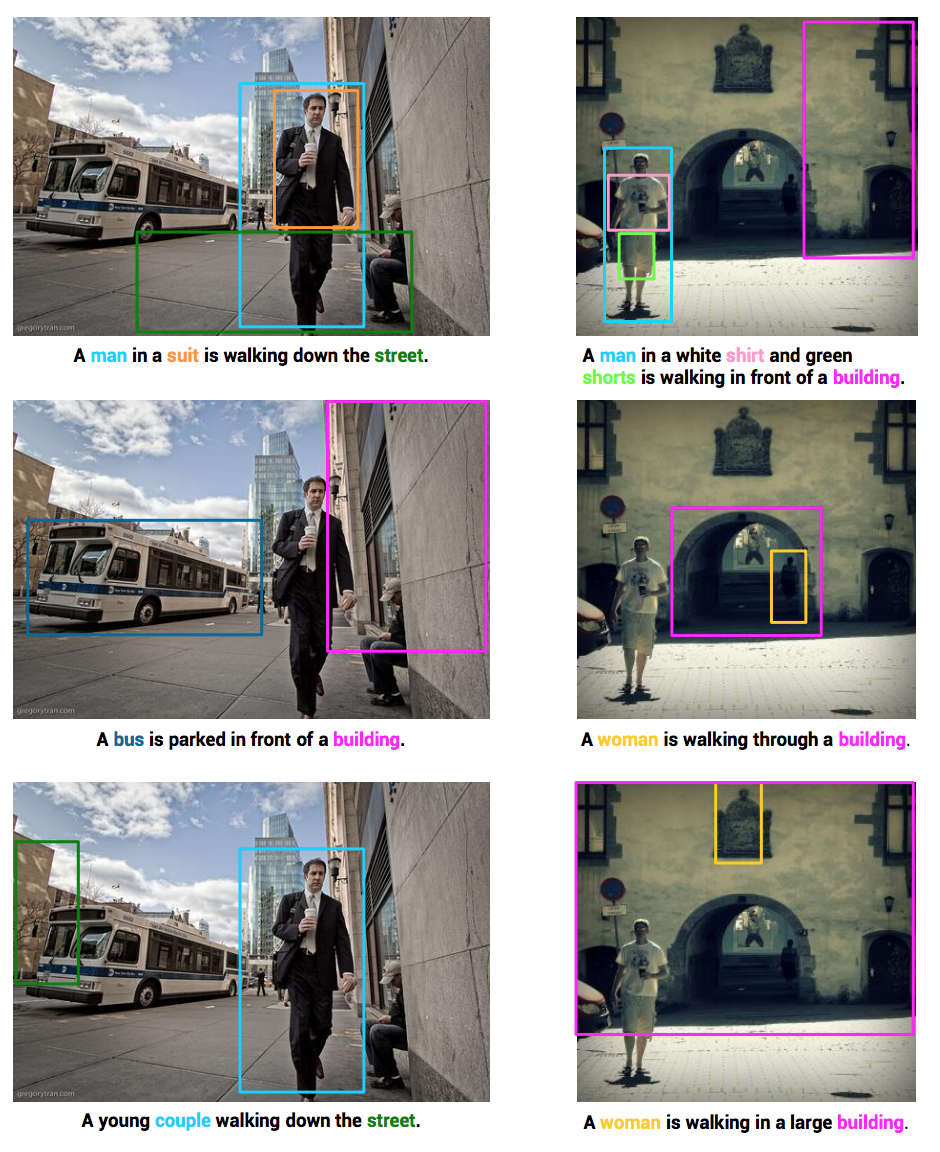

Improving Weakly Supervised Visual Grounding by Contrastive Knowledge Distillation. CVPR, 2021. Code

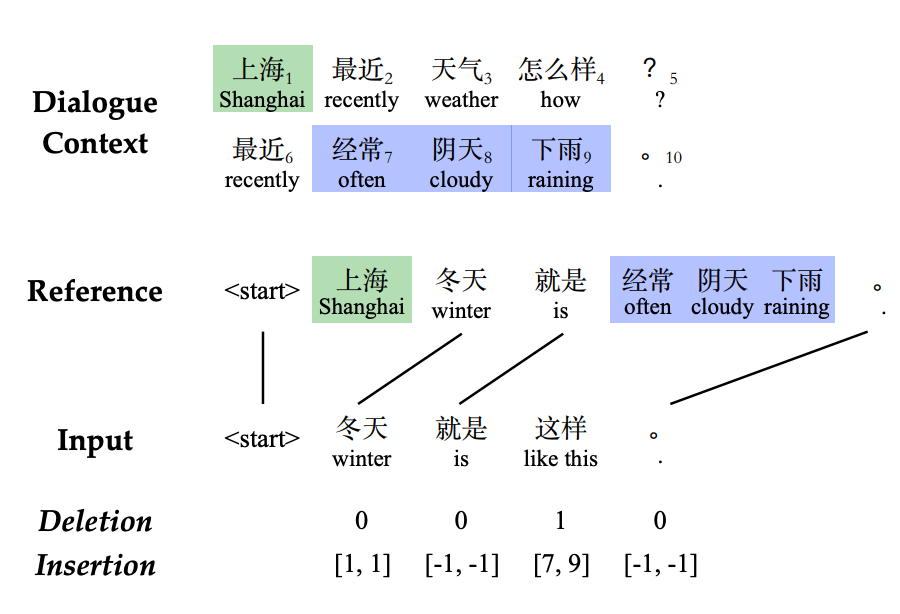

Robust Dialogue Utterance Rewriting as Sequence Tagging. EMNLP, 2021. Code

Comprehensive Image Captioning via Scene Graph Decomposition. ECCV, 2020. Code

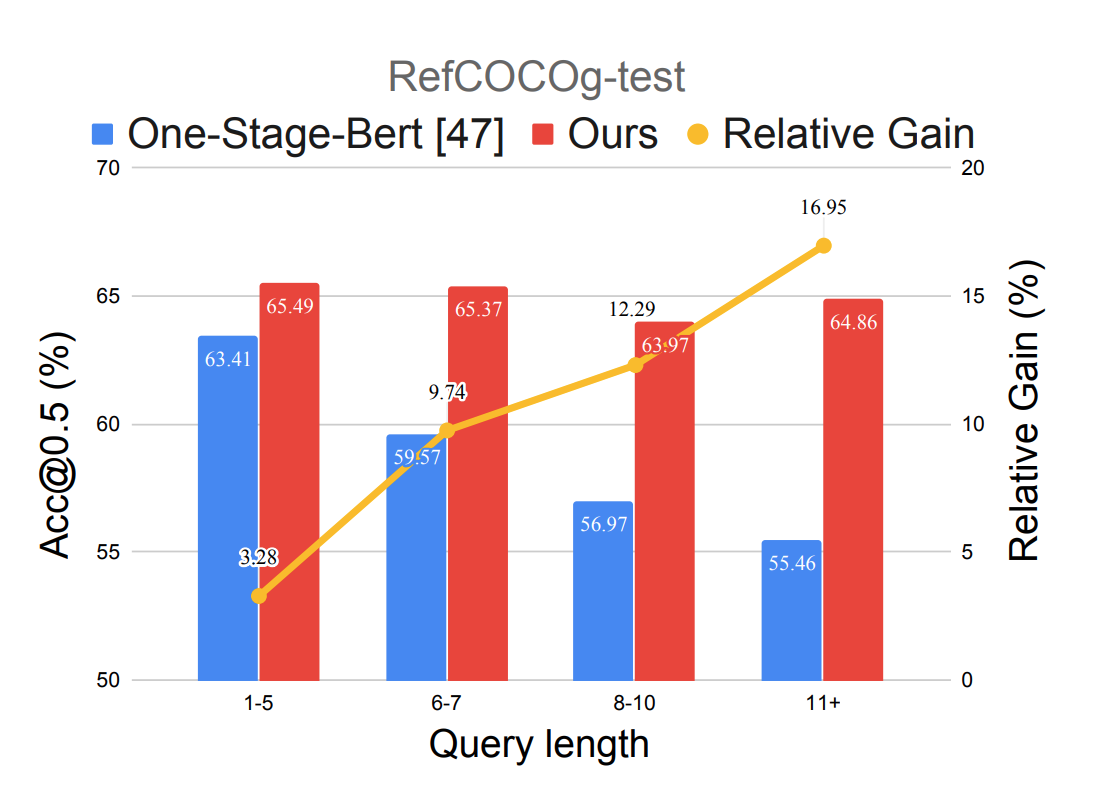

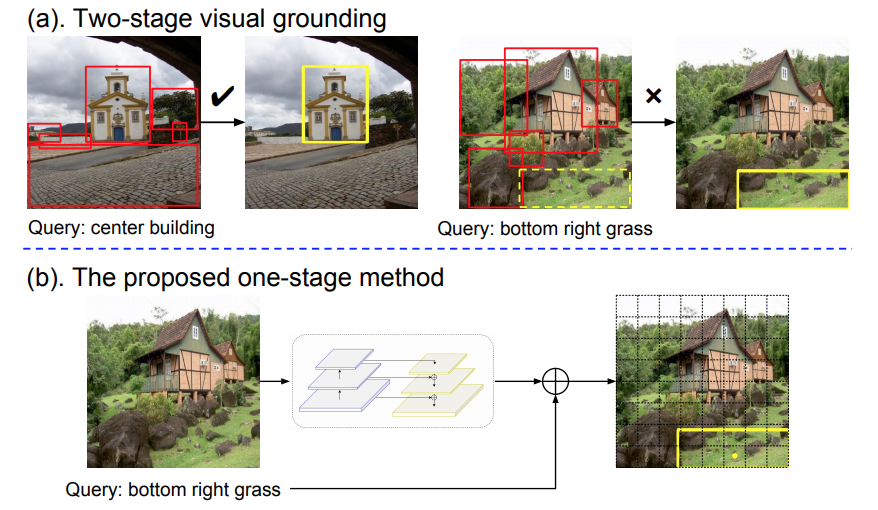

Improving One-stage Visual Grounding by Recursive Sub-query Construction. ECCV, 2020. Code

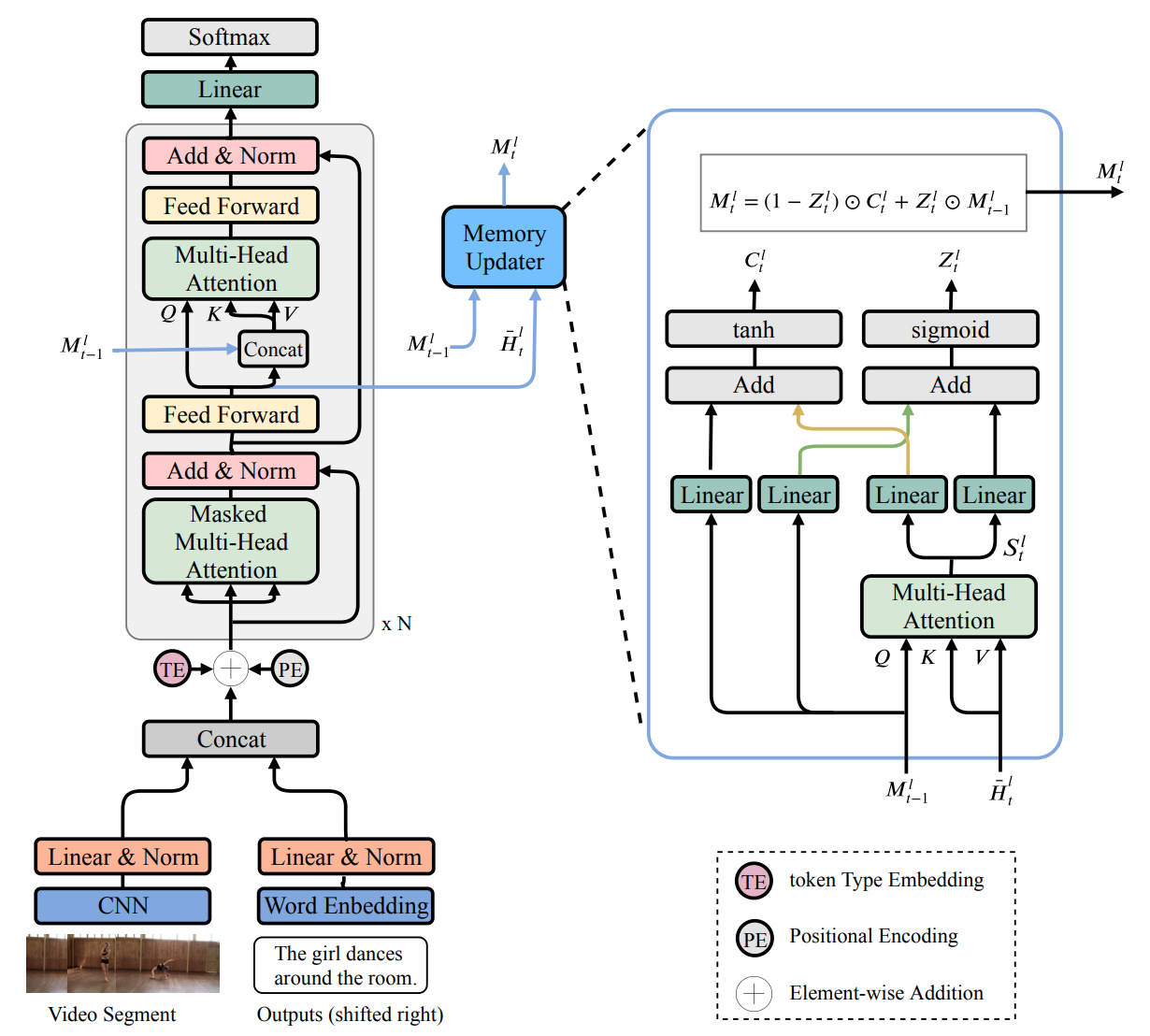

MART: Memory-Augmented Recurrent Transformer for Coherent Video Paragraph Captioning. ACL, 2020. Code

A Fast and Accurate One-Stage Approach to Visual Grounding. ICCV, 2019, Oral Presentation. Code

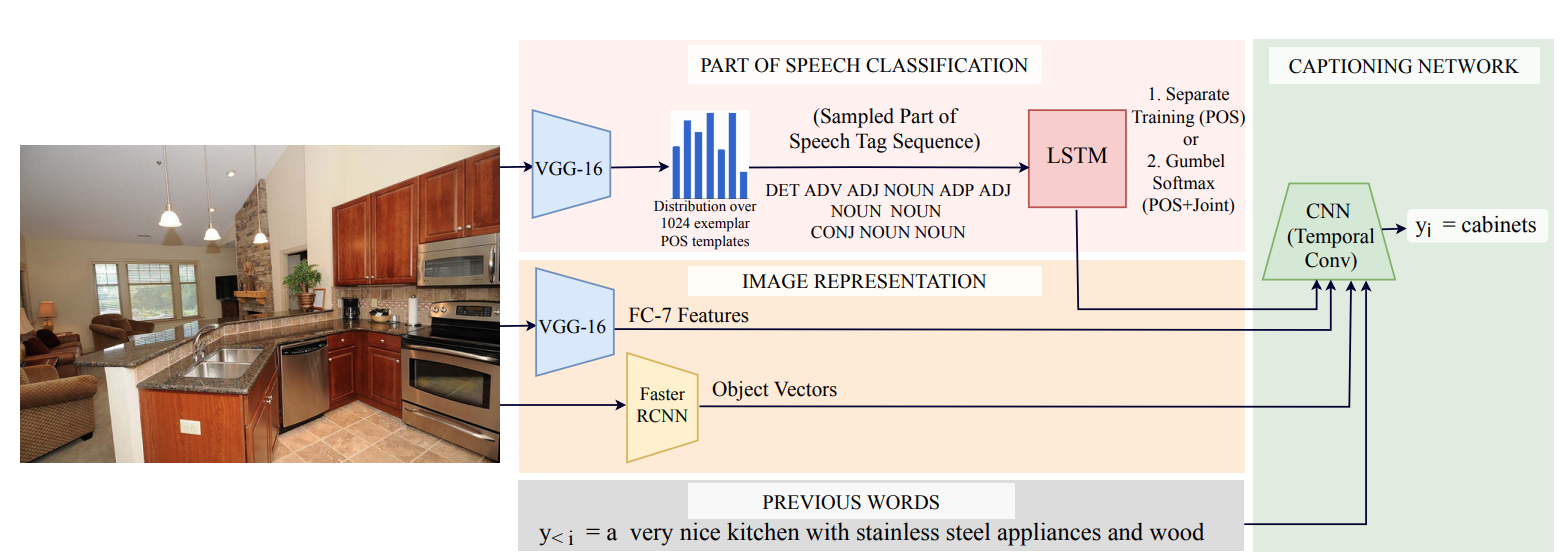

Fast, Diverse and Accurate Image Captioning Guided By Part-of-Speech. CVPR, 2019, Oral Presentation.

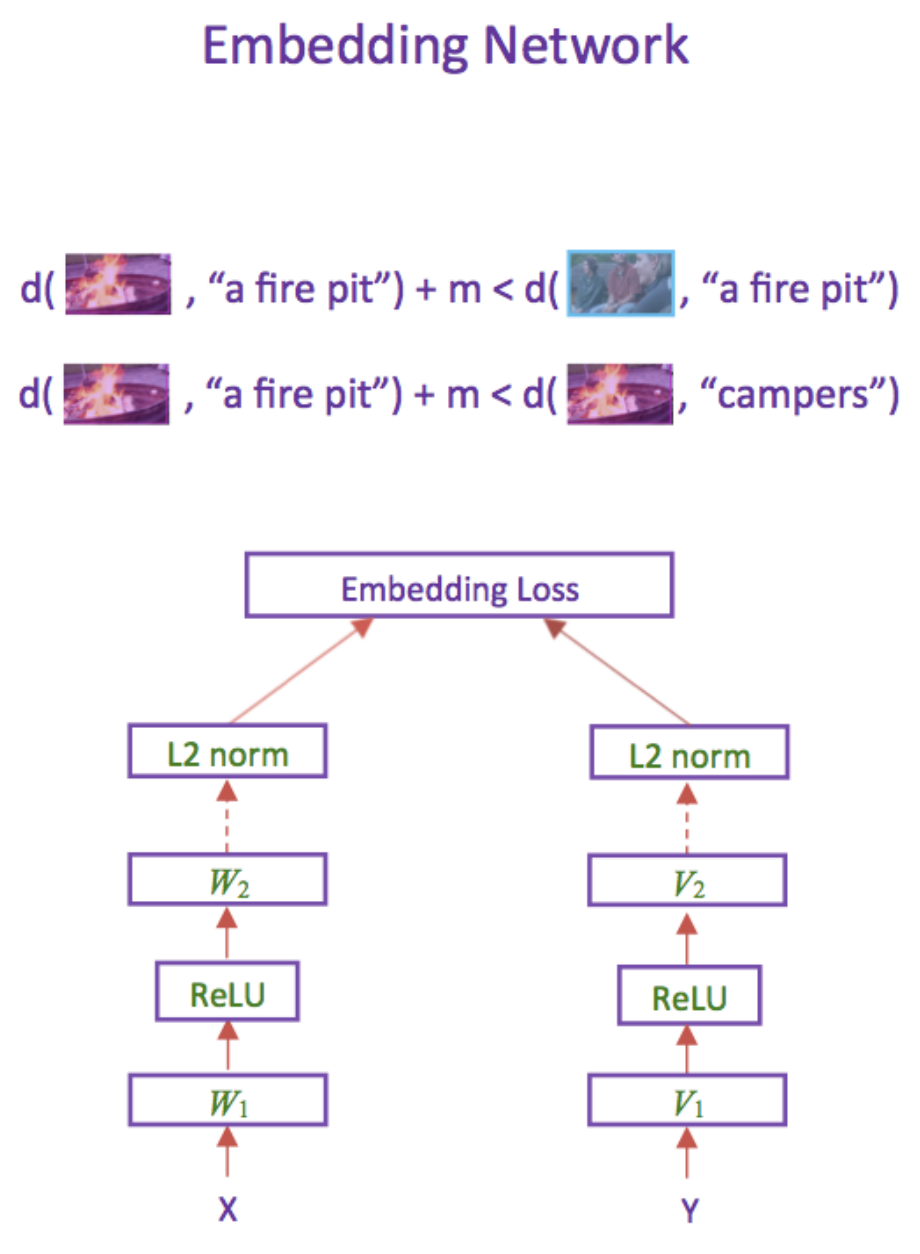

Learning Two-Branch Neural Networks for Image-Text Matching Tasks. TPAMI, 2018. Code

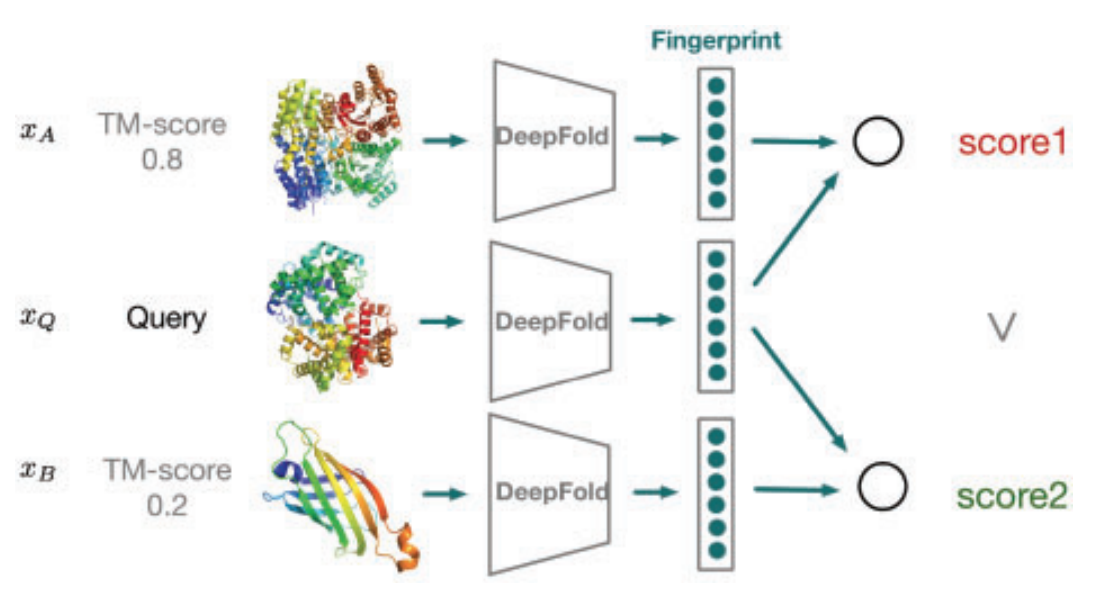

Learning structural motif representations for efficient protein structure search. Bioinformatics, 2018. Code

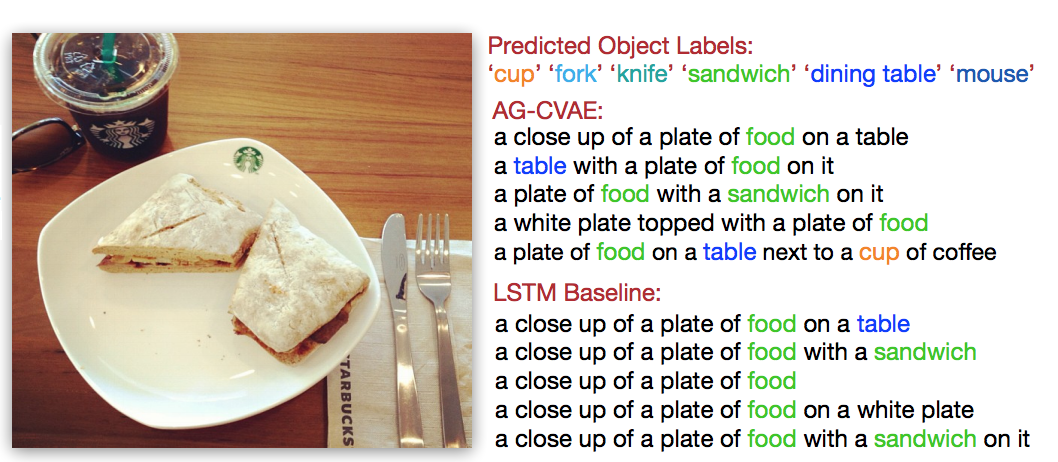

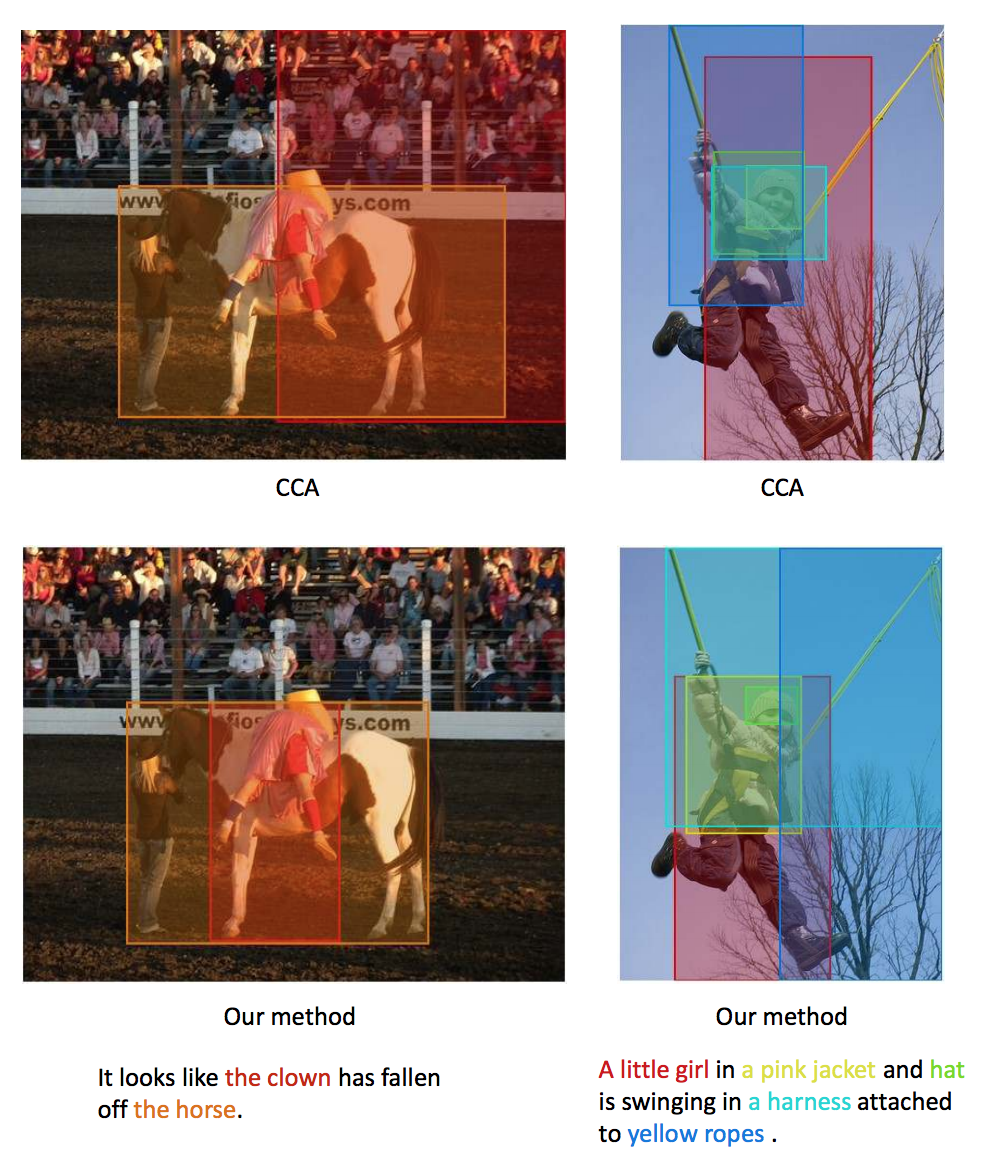

Liwei Wang, Alex Schwing, Svetlana Lazebnik. NeurIPS, 2017.

Flickr30k Entities: Collecting Region-to-Phrase Correspondences for Richer Image-to-Sentence Models. IJCV, 2016. Project

Learning Deep Structure-Preserving Image-Text Embeddings. CVPR, 2016. Code
